Updating GlorpSQLite for Pharo 8

I've started to update Glorp and GlorpSQLite for Pharo 8. This post lists the stuff to be handled.

Changes in Pharo

First, changes in Pharo from version to version. Glorp's TimedProxyReaper uses a

weak-valued dictionary to hold TimedProxy instances. In Pharo 6,

WeakValueDictionary>>at:put: essentially does the following:

WeakValueAssociation key: key value: anObject

In Pharo 7, that became:

WeakValueAssociation key: key value: anObject asSetElement

This required TimedProxy to implement #asSetElement.

In Pharo 8, #asSetElement is deprecated in favour of #asCollectionElement.

WeakValueAssociation key: key value: anObject asCollectionElement

So TimedProxy now also needs #asCollectionElement.

New SQLite Binding

The Pharo community has consolidated around Pharo-SQLite3 as the definitive SQLite binding going forward. GlorpSQLite uses the now-legacy UDBC-SQLite binding currently. This change should be straightforward.

Glorp Changes

Todd Blanchard has been working on Ruby on Rails-style ActiveRecord for Glorp, and testing the changes with PostgreSQL.

CI

With independently evolving drivers for SQLite, PostgreSQL and MySQL, and the ActiveRecord work changing Glorp itself, the time has come to set up CI for Glorp.

Tags: Glorp, OODB, SQLitePharo SQLite I18N Enhancements

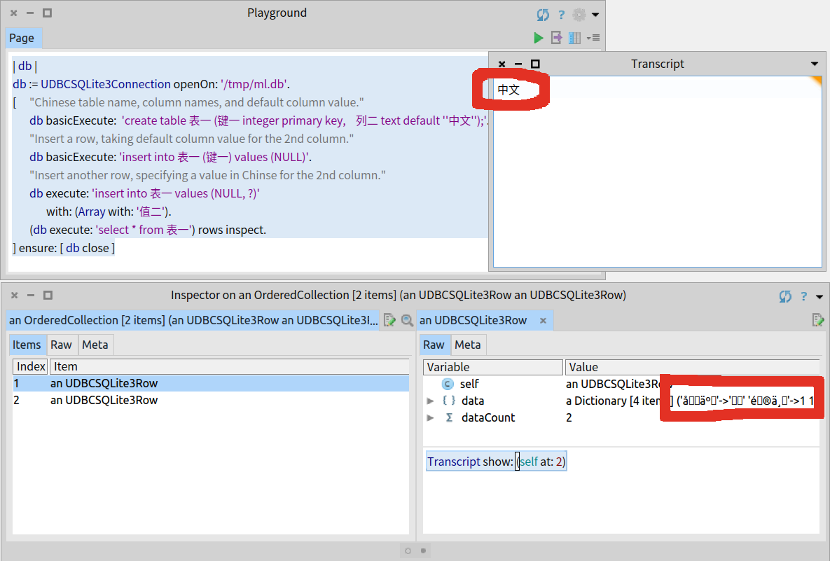

I've enhanced the Pharo SQLite library to be even more multilingual. It has always supported data elements that are Pharo WideString instances, these being converted to/from UTF8 transparently by the library. Now the library also handles multilingual table names, column names and default column values; in other words, multilingual SQL statements.

To install in Pharo 7, load GlorpSQLite from the Catalog Browser.

Example:

| db |

db := UDBCSQLite3Connection openOn: '/tmp/ml.db'.

[ "Chinese table name, column names, and default column value."

db basicExecute: 'create table 表一 (键一 integer primary key, 列二 text default ''中文'');'.

"Insert a row, taking default column value for the 2nd column."

db basicExecute: 'insert into 表一 (键一) values (NULL)'.

"Insert another row, specifying a value in Chinese for the 2nd column."

db execute: 'insert into 表一 values (NULL, ?)'

with: (Array with: '值二').

(db execute: 'select * from 表一') rows inspect.

] ensure: [ db close ]

Inspector shows that it isn't quite I18N, although Transcript is:

From the SQLite shell:

% sqlite3 /tmp/ml.db

SQLite version 3.27.2 2019-02-25 16:06:06

Enter ".help" for usage hints.

sqlite> .header on

sqlite> .schema

CREATE TABLE 表一 (键一 integer primary key, 列二 text default '中文');

sqlite> select * from 表一;

键一|列二

1|中文

2|值二

sqlite>

Testing and feedback welcome, especially on which other parts of the library needing internationalization.

Tags: SQLiteGlorp SQLite on Pharo 7

GlorpSQLite works on Pharo 7!

Take a fresh Pharo 7 alpha image; as of yesterday's download that is 5f13ae8. Launch it and run the following snippet in a Playground:

Metacello new

baseline: 'GlorpSQLite';

repository: 'github://PierceNg/glorp-sqlite3:pharo7dev';

load.

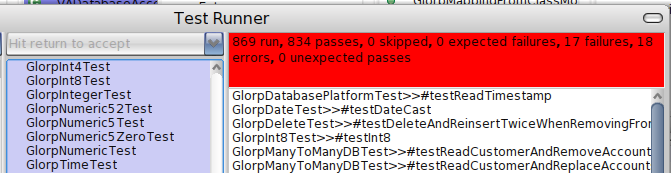

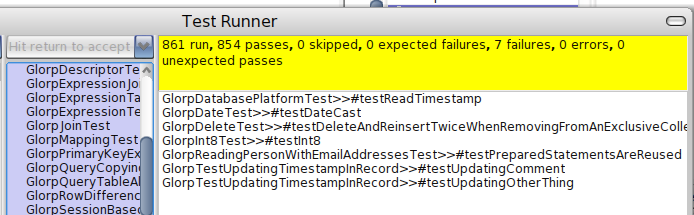

Run the Glorp tests in TestRunner. The result should be green, with all 891 tests passed and 12 tests skipped. The database file is sodbxtestu.db in your image directory. Tested on 32- and 64-bit Ubuntu 18.04.

Tags: Glorp, OODB, SQLiteGlorp Mapping Existing Schema - Part 2

This is the second post in a short series on the topic. The last post looked at the tables GROUPS and TEAMS in the OpenFootball relational database schema. There is also the table GROUPS_TEAMS, usually known as a link table, which, ahem, "relates" the GROUPS and TEAMS table. GROUPS_TEAMS has the following schema:

CREATE TABLE IF NOT EXISTS "groups_teams" (

"id" integer PRIMARY KEY AUTOINCREMENT NOT NULL,

"group_id" integer NOT NULL,

"team_id" integer NOT NULL,

"created_at" datetime NOT NULL,

"updated_at" datetime NOT NULL

);

A row in GROUPS_TEAMS with group_id of XXX and team_id of YYY means that the team represented by team_id YYY is in the group with group_id XXX.

Let's modify the Smalltalk class OFGroup to handle the linkage, by adding the inst-var 'teams' and creating accessors for it.

OFObject subclass: #OFGroup

instanceVariableNames: 'eventId title pos teams'

classVariableNames: ''

package: 'OpenFootball'

Next, modify the mapping for OFGroup in OFDescriptorSystem:

classModelForOFGroup: aClassModel

self virtualClassModelForOFObject: aClassModel.

aClassModel newAttributeNamed: #eventId type: Integer.

aClassModel newAttributeNamed: #title type: String.

aClassModel newAttributeNamed: #pos type: Integer.

"Next item is for linking OFGroup with OFTeam."

aClassModel newAttributeNamed: #teams collectionOf: OFTeam.

descriptorForOFGroup: aDescriptor

| t |

t := self tableNamed: 'GROUPS'.

aDescriptor table: t.

self virtualDescriptorForOFObject: aDescriptor with: t.

(aDescriptor newMapping: DirectMapping)

from: #eventId

type: Integer

to: (t fieldNamed: 'event_id').

(aDescriptor newMapping: DirectMapping)

from: #title

type: String

to: (t fieldNamed: 'title').

(aDescriptor newMapping: DirectMapping)

from: #pos

type: Integer

to: (t fieldNamed: 'pos'.

"Next item is for linking OFGroup with OFTeam."

(aDescriptor newMapping: ManyToManyMapping)

attributeName: #teams.

"No change to #tableForGROUPS:."

It is now necessary to add the table GROUPS_TEAMS to OFDescriptorSystem:

tableForGROUPS_TEAMS: aTable

| gid tid |

self virtualTableForOFObject: aTable.

gid := aTable createFieldNamed: 'group_id' type: platform integer.

aTable addForeignKeyFrom: gid to: ((self tableNamed: 'GROUPS') fieldNamed: 'id').

tid := aTable createFieldNamed: 'team_id' type: platform integer.

aTable addForeignKeyFrom: tid to: ((self tableNamed: 'TEAMS') fieldNamed: 'id').

Now let's fetch the OFGroup instances with their linked OFTeam instances.

| vh |

Transcript clear.

OFDatabase dbFileName: 'wc2018.db'

evaluate: [ :db |

db session accessor logging: true. "This shows the generated SQL."

vh := String streamContents: [ :str |

(db session read: OFGroup) do: [ :ea |

str nextPutAll: ea title; nextPut: Character cr.

ea teams do: [ :team |

str nextPutAll: '- ', team title; nextPut: Character cr ]]]].

vh

The above snippet produces the following output:

Group A

- Egypt

- Russia

- Saudi Arabia

- Uruguay

<some output omitted>

Group H

- Senegal

- Japan

- Poland

- Colombia

In the snippet, logging is enabled, and the SQL generated by Glorp is displayed in the Transcript (with whitespace inserted for readability). What we see is the infamous "N+1 selects problem" in action - the first SELECT fetches the GROUPS rows, then, for each group_id, there is a corresponding SELECT to fetch the TEAMS rows.

SELECT t1.id, t1.created_at, t1.updated_at, t1.event_id, t1.title, t1.pos

FROM GROUPS t1 an OrderedCollection()

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(1)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(2)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(3)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(4)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(5)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(6)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(7)

SELECT t1.id, t1.created_at, t1.updated_at, t1.key, t1.title

FROM TEAMS t1, GROUPS_TEAMS t2

WHERE ((t2.team_id = t1.id) AND (t2.group_id = ?)) an OrderedCollection(8)

Fortunately Glorp is cleverer than this, and provides a way to avoid the N+1 problem, by using the message #alsoFetch:.

| vh |

Transcript clear.

OFDatabase dbFileName: 'wc2018.db'

evaluate: [ :db |

| query |

db session accessor logging: true.

query := Query read: OFGroup.

query alsoFetch: [ :ea | ea teams ]. " <== See me. "

vh := String streamContents: [ :str |

(db session execute: query) do: [ :ea |

str nextPutAll: ea title; nextPut: Character cr.

ea teams do: [ :team |

str nextPutAll: '- ', team title; nextPut: Character cr ]]]].

vh

Same output as before, but this time the SQL (pretty-printed by hand for readability) is much shorter and properly takes advantage of the SQL language.

SELECT t1.id, t1.created_at, t1.updated_at, t1.event_id, t1.title, t1.pos,

t2.id, t2.created_at, t2.updated_at, t2.key, t2.title

FROM GROUPS t1

INNER JOIN GROUPS_TEAMS t3 ON (t1.id = t3.group_id)

INNER JOIN TEAMS t2 ON (t3.team_id = t2.id)

ORDER BY t1.id an OrderedCollection()

Glorp Mapping Existing Schema - Part 1

Using OpenFootball-Glorp for illustration, this post is the first in a series on mapping an existing normalized database schema and other fun Glorp stuff. As usual, I'm using SQLite for the database.

Consider the tables GROUPS and TEAMS.

CREATE TABLE IF NOT EXISTS "groups" (

"id" integer PRIMARY KEY AUTOINCREMENT NOT NULL,

"event_id" integer NOT NULL,

"title" varchar NOT NULL,

"pos" integer NOT NULL,

"created_at" datetime NOT NULL,

"updated_at" datetime NOT NULL

);

CREATE TABLE IF NOT EXISTS "teams" (

"id" integer PRIMARY KEY AUTOINCREMENT NOT NULL,

"key" varchar NOT NULL,

"title" varchar NOT NULL,

-- many other columns omitted for now --

"created_at" datetime NOT NULL,

"updated_at" datetime NOT NULL

);

As it happens, every table in OpenFootball has columns "id", "created_at" and "updated_at", where "id" is that table's primary key. Let's take advantage of Smalltalk's inheritance and class hierarchy to map these columns and tables:

Object subclass: #OFObject

instanceVariableNames: 'pid createdAt updatedAt'

classVariableNames: ''

package: 'OpenFootball'

"Maps to GROUPS."

OFObject subclass: #OFGroup

instanceVariableNames: 'eventId title pos'

classVariableNames: ''

package: 'OpenFootball'

"Maps to TEAMS."

OFObject subclass: #OFTeam

instanceVariableNames: 'key title'

classVariableNames: ''

package: 'OpenFootball'

By convention, the Glorp mapping is encapsulated in the class OFDescriptor, which has these supporting methods:

virtualClassModelForOFObject: aClassModel

aClassModel newAttributeNamed: #pid type: Integer.

aClassModel newAttributeNamed: #createdAt type: DateAndTime.

aClassModel newAttributeNamed: #updatedAt type: DateAndTime.

virtualDescriptorForOFObject: aDescriptor with: aTable

(aDescriptor newMapping: DirectMapping)

from: #pid

to: (aTable fieldNamed: 'id'). "This is the primary key mapping."

(aDescriptor newMapping: DirectMapping)

from: #createdAt

type: DateAndTime

to: (aTable fieldNamed: 'created_at').

(aDescriptor newMapping: DirectMapping)

from: #updatedAt

type: DateAndTime

to: (aTable fieldNamed: 'updated_at').

virtualTableForOFObject: aTable

(aTable createFieldNamed: 'id' type: platform serial) bePrimaryKey.

aTable createFieldNamed: 'created_at' type: platform datetime.

aTable createFieldNamed: 'updated_at' type: platform datetime.

The mapping for OFGroup is as follows:

classModelForOFGroup: aClassModel

self virtualClassModelForOFObject: aClassModel.

aClassModel newAttributeNamed: #eventId type: Integer.

aClassModel newAttributeNamed: #title type: String.

aClassModel newAttributeNamed: #pos type: Integer.

descriptorForOFGroup: aDescriptor

| t |

t := self tableNamed: 'GROUPS'.

aDescriptor table: t.

self virtualDescriptorForOFObject: aDescriptor with: t.

(aDescriptor newMapping: DirectMapping)

from: #eventId

type: Integer

to: (t fieldNamed: 'event_id').

(aDescriptor newMapping: DirectMapping)

from: #title

type: String

to: (t fieldNamed: 'title').

(aDescriptor newMapping: DirectMapping)

from: #pos

type: Integer

to: (t fieldNamed: 'pos'.

tableForGROUPS: aTable

self virtualTableForOFObject: aTable.

aTable createFieldNamed: 'event_id' type: platform integer.

aTable createFieldNamed: 'title' type: platform varchar.

aTable createFieldNamed: 'pos' type: platform integer.

The mapping for OFTeam is similar and I've not shown it here for brevity.

To round out the scene setting, OFDatabase, the "database interface" class, has class-side convenience methods to run snippets like so:

OFDatabase

dbFileName: 'wc2018.db'

evaluate: [ :db |

db session read: OFGroup ]

To be continued...

Tags: Glorp, SQLiteWorld Cup 2018 with Glorp

I last wrote about football.db in a Jul 2014 blog post. Four years have gone by, and the World Cup is here again. This time around, I've started building a Glorp descriptor system for the World Cup data.

football.db's data is described in YAML files which are assembled into SQLite databases using tools written in Ruby. From the constructed 2014 and 2018 World Cup databases, I've created SQL dumps and placed them in the repo. To get an SQLite database from the 2018 SQL dump file:

% sqlite3 wc2018.db < wc2018.sql

In its current state, footballdb-Glorp allows querying the initial World Cup first round group membership.

| login sess |

login := Login new

database: UDBCSQLite3Platform new;

host: '';

port: '';

username: '';

password: '';

databaseName: '/tmp/wc2018.db';

yourself.

sess := OFDescriptor sessionForLogin: login.

sess login.

[ Transcript clear.

(sess read: OFTournamentTeam) do: [ :ea |

Transcript show: ea group title , ' - ', ea team title; cr ]

] ensure: [ sess logout ].

This Glorp descriptor may not be completed in time for this World Cup which is starting in a few days, but will be in time for the next one for sure! :-) Load it thusly:

Metacello new

repository: 'github://PierceNg/footballdb-Glorp:master/repo';

baseline: 'OpenFootball';

load.

SQLite Boolean

From its documentation, SQLite does not have a separate Boolean storage class. Instead, Boolean values are stored as integers 0 (false) and 1 (true).

I've added Boolean handling to UDBC-SQLite. When writing to an SQLite database from Pharo, true is written as integer 1 and false as integer 0. SQLite uses dynamic typing, and any column in an SQLite database, except an INTEGER PRIMARY KEY column, may be used to store a value of any type, irrespective of the column's type declaration. As such, when writing Boolean values to a database, UDBC-SQLite does not check the database's type declaration.

When reading an SQLite database, UDBC-SQLite does check a column's type declaration: If a column is Boolean, UDBC-SQLite reads 1 as true, 0 as false, NULL as nil, and any other integer values raises an exception. I've encountered real world data where the string "t" means true and "f" means false for a Boolean column, so UDBC-SQLite handles these cases too.

Glorp has been similarly updated. Loading GlorpSQLite, from my development fork for now, installs both UDBC-SQLite and Glorp:

Metacello new

repository: 'github://PierceNg/glorp-sqlite3';

baseline: 'GlorpSQLite';

load.

All Glorp unit tests should pass. Tested on Linux using fresh 32- and 64-bit 60540 images.

Tags: Glorp, SQLiteSQLite Loadable Extensions

SQLite has the ability to load extensions at run-time. I've now implemented this functionality in UDBC-SQLite.

An SQLite extension is built as a .so/dylib/dll shared library file. Let's use SQLite's rot13 extension as our example. The source file rot13.c is located in the SQLite source code's ext/misc directory. To build the rot13 extension, also download the amalgamation. Unzip the amalgamation and copy rot13.c into its directory. Build the extension:

% gcc -fPIC -shared -o rot13.so -I. rot13.c

Verify that the extension works:

% sqlite3

SQLite version 3.22.0 2018-01-22 18:45:57

Enter ".help" for usage hints.

Connected to a transient in-memory database.

Use ".open FILENAME" to reopen on a persistent database.

sqlite> select rot13('qwerty');

Error: no such function: rot13

sqlite> .load "./rot13.so"

sqlite> select rot13('qwerty');

djregl

sqlite> select rot13('djregl');

qwerty

sqlite>

For use with Pharo, copy rot13.so into the Pharo VM directory where all the other .so files are.

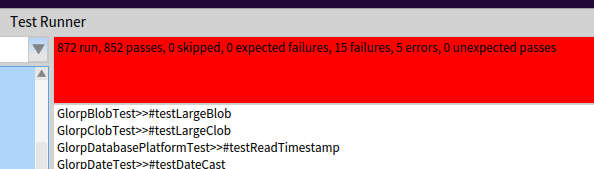

Next steps are done in Pharo. For the purpose of this blog post, I downloaded a fresh Pharo 60536 64-bit image. Start the image and install GlorpSQLite from the Catalog browser, which installs the latest UDBC-SQLite. (This also installs Glorp, of course. If you run Glorp's unit tests from Test Runner you should get all 890 tests passed.)

In a playground, run this snippet and Transcript should show, first, the text "no such function: rot13" and then the rot13 outputs.

| db rs r1 r2 |

Transcript clear.

db := UDBCSQLite3Connection openOn: ':memory:'.

[ [ db execute: 'select rot13(''qwerty'')' ]

on: UDBCSQLite3Error

do: [ ex: | Transcript show: ex messageText; cr ].

db enableExtensions; "<== New stuff."

loadExtension: 'rot13.so'. "<== New stuff."

rs := db execute: 'select rot13(''qwerty'') as r1'.

r1 := rs next at: 'r1'.

Transcript show: r1; cr.

rs := db execute: 'select rot13(?) as r2' with: (Array with: r1).

r2 := rs next at: 'r2'.

Transcript show: r2; cr.

] ensure: [ db close ]

Note the messages #enableExtensions and #loadExtension:. For security reasons, extension loading is disabled by default.

Tested on Linux latest Pharo 64-bit 60536.

Tags: SQLiteGlorp SQLite on Pharo 6

I've updated ConfigurationOfGlorp for Pharo 6 and added catalog methods to ConfigurationOfGlorpSQLite.

Take a fresh Pharo 60365 image, the latest as of yesterday's download. Launch it, open the Catalog Browser, and install GlorpSQLite from there.

Run the Glorp tests in TestRunner. The result should be green, with all 889 tests passed and 12 tests skipped. The database file is sodbxtestu.db in your image directory.

(On Pharo 5, Glorp runs and passes total of 953 tests. Something to look into.)

Tags: Glorp, OODB, SQLiteConfigurationOfGlorpSQLite

I've created ConfigurationOfGlorpSQLite on STH.

Take a fresh Pharo 5 image. Make a script like the following and run it on the image:

% cat loadGlorpSQLite.sh

#!/bin/sh

MCREPO=http://www.smalltalkhub.com/mc/DBXTalk/Glorp/main/

pharo $1.image config $MCREPO ConfigurationOfGlorpSQLite --install=stable

% ./loadGlorpSQLite.sh Pharo-50757

When done, fire up the image again, and run the Glorp tests in TestRunner. The result should be green, with all 953 tests passed and 12 tests skipped. The database file is sodbxtestu.db in your image directory.

Tags: Glorp, OODB, SQLiteGlorp-SQLite3 for Pharo 5

I'm pleased to announce the release of Glorp-SQLite3 for Pharo 5.

Developed and tested on Linux. Known working on Windows 7. Your Pharo 5 VM needs to be able to find libsqlite3.so or the Windows equivalent.

Take a fresh Pharo 5 image. Run the following:

Gofer it

smalltalkhubUser: 'TorstenBergmann' project: 'UDBC';

configuration;

load.

(Smalltalk at: #ConfigurationOfUDBC) loadBleedingEdge.

Gofer it

smalltalkhubUser: 'DBXTalk' project: 'Glorp';

configurationOf: 'Glorp';

load.

#ConfigurationOfGlorp asClass project stableVersion load.

Gofer it

smalltalkhubUser: 'DBXTalk' project: 'Glorp';

package: 'Glorp-SQLite3';

load.

GlorpSQLite3CIConfiguration new configureSqlite3.

GlorpDemoTablePopulatorResource invalidateSetup.

Run the Glorp tests in TestRunner. All tests should pass, with 12 tests skipped. The database file is sodbxtestu.db in your image directory.

Tags: Glorp, OODB, SQLiteSQLite and JSON

SQLite 3.9.0, released in Oct 2015, introduced the json1 extension that provides functions to manage JSON content stored in an SQLite database. The extension source code is included in the SQLite source amalgamation and is enabled by the -DSQLITE_ENABLE_JSON1 option.

The following assumes that the json1 functionality is available. As sample data, I use the stocks data set from jsonstudio.com. Here's what the wrapped first line of the JSON data file looks like partially:

{ "_id" : { "$oid" : "52853800bb1177ca391c17ff" }, "Ticker" : "A", "Profit

Margin" : 0.137, "Institutional Ownership" : 0.847, "EPS growth past 5

years" : 0.158, "Total Debt/Equity" : 0.5600000000000001, "Current Ratio" :

3, "Return on Assets" : 0.089, "Sector" : "Healthcare", "P/S" : 2.54,

"Change from Open" : -0.0148, "Performance (YTD)" : 0.2605, "Performance

(Week)" : 0.0031, "Quick Ratio" : 2.3, "Insider Transactions" : -0.1352,

To load the file into an SQLite database using NBSQLite3:

| jr db |

jr := (FileSystem / 'tmp' / 'stocks.json') asFileReference readStream.

jr ascii.

db := NBSQLite3Connection openOn: '/tmp/s.db'.

[ db execute: 'create table s (d json)'.

db execute: 'begin'.

[ jr atEnd ] whileFalse: [

db execute: 'insert into s values (json(?))' "<== Note."

with: (Array with: jr nextLine) ]

db execute: 'commit'.

] ensure: [ db close ]

jr close.

Note the invocation of the json() SQL function, which verifies that its argument is a valid JSON string and returns a minified version of that JSON string.

Let's inspect the created database with the sqlite3 shell tool:

$ wc -l stocks.json

6756 stocks.json

$ sqlite3 s.db

SQLite version 3.9.2 2015-11-02 18:31:45

Enter ".help" for usage hints.

sqlite> select count(*) from s;

6756

sqlite> .schema

CREATE TABLE s (d json);

sqlite>

The json_extract(X, P1, P2, ...) function extracts and returns one or more values from the JSON structure X:

sqlite> select json_extract(d, '$.Sector', '$.Company')

...> from s

...> where json_extract(d, '$.Ticker') = 'AAPL';

["Consumer Goods","Apple Inc."]

sqlite>

The json_each() table-valued function walks the JSON value provided as its argument and returns one row for each element. The schema for the table returned by json_each() is as follows:

CREATE TABLE json_tree(

key ANY, -- key for current element relative to its parent

value ANY, -- value for the current element

type TEXT, -- 'object','array','string','integer', etc.

atom ANY, -- value for primitive types, null for array & object

id INTEGER -- integer ID for this element

parent INTEGER, -- integer ID for the parent of this element

fullkey TEXT, -- full path describing the current element

path TEXT, -- path to the container of the current row

json JSON HIDDEN, -- 1st input parameter: the raw JSON

root TEXT HIDDEN -- 2nd input parameter: the PATH at which to start

);

Here's json_each() in action:

sqlite> select key, value

...> from s, json_each(s.d)

...> where json_extract(d, '$.Ticker') = 'AAPL'

...> limit 10;

_id|{"$oid":"52853800bb1177ca391c1809"}

Ticker|AAPL

Profit Margin|0.217

Institutional Ownership|0.621

EPS growth past 5 years|0.424

Total Debt/Equity|0.14

Current Ratio|1.7

Return on Assets|0.186

Sector|Consumer Goods

P/S|2.75

sqlite>

Let's run the same query in Pharo:

| q db v |

q := 'select key, value from s, json_each(s.d) where json_extract(d, "$.Ticker") = "AAPL" limit 10'.

db := NBSQLite3Connection openOn: '/tmp/s.db'.

[ (db execute: q) rows do: [ :r |

Transcript show: (r at: 'key') asString, ' = '.

v := r at: 'value'.

Transcript show: v asString, ' (', v class asString, ')'; cr ]

] ensure: [ db close ]

Transcript displays thusly. As we can see, SQLite and NBSQLite3 handle numerical values in the JSON data properly.

_id = {"$oid":"52853800bb1177ca391c1809"} (ByteString)

Ticker = AAPL (ByteString)

Profit Margin = 0.217 (Float)

Institutional Ownership = 0.621 (Float)

EPS growth past 5 years = 0.424 (Float)

Total Debt/Equity = 0.14 (Float)

Current Ratio = 1.7 (Float)

Return on Assets = 0.186 (Float)

Sector = Consumer Goods (ByteString)

P/S = 2.75 (Float)

Notice that the first row is well-formed JSON. If we use json_tree() instead of json_row(), that JSON structure will be returned as a key/value row as well.

Finally, let's read the first JSON record using NeoJSON:

| q db |

q := 'select json_extract(d, "$") as data from s limit 1'.

db := NBSQLite3Connection openOn: '/tmp/s.db'.

[ (NeoJSONReader on: ((db execute: q) rows first at: 'data') readStream)

next explore

] ensure: [ db close ].

This is powerful stuff. Well worth a look.

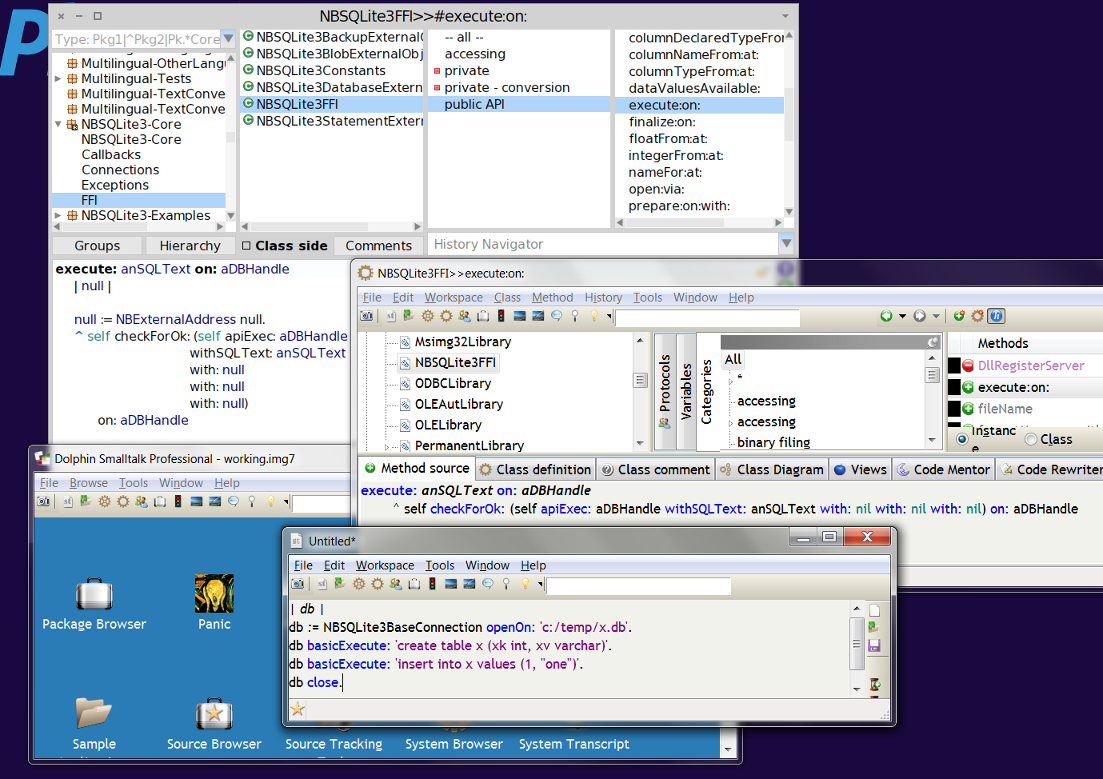

Tags: NoSQL, SQLiteNBSQLite3 on Dolphin Smalltalk 7

Dolphin Smalltalk 7 has been released as open source. Many thanks to Object Arts.

I spent an afternoon updating the mostly unused Windows partition on my laptop, installing various development tools, and playing with Dolphin 7. As a proof of concept, NBSQLite3 now runs on Dolphin 7. :-)

Happy new year!

Tags: Dolphin Smalltalk, SQLiteNBSQLite3 with SQLcipher

SQLcipher "is an open source extension to SQLite that provides transparent 256-bit AES encryption of database files." SQLcipher provides the same API as the SQLite Encryption Extension (SEE) by D Richard Hipp, the original developer of SQLite.

I've added SQLcipher/SEE's API to NBSQLite3. By convention, on Unix, SQLcipher produces a shared library named libsqlcipher.so, while the SQLite shared library is named libsqlite3.so. NBSQLite3 switches between the two libraries based on the messages #beSQLcipher and #beSQLite to the NBSQLite3FFI class.

Here's a demonstration code snippet using the keying pragma in SQL:

| dbf db rs row |

Transcript open; clear.

NBSQLite3FFI beSQLcipher.

dbf := FileReference newTempFilePrefix: 'cipher-' suffix: '.cdb'.

db := NBSQLite3Connection openOn: dbf fullName.

Transcript show: 'Creating an encrypted database with some data.'; cr.

[ db basicExecute: 'pragma key = "test"'.

db basicExecute: 'create table if not exists x (xk integer primary key, iv integer, tv text);'.

db beginTransaction.

[ rs := db execute: 'insert into x values (NULL, ?, ?)' with: #(1 'two') ] ensure: [ rs close ].

db commitTransaction

] ensure: [ db close ].

db := NBSQLite3Connection openOn: dbf fullName.

Transcript show: 'Opening the encrypted database.'; cr.

[ db basicExecute: 'pragma key = "test"'.

[ rs := db execute: 'select * from x'.

row := rs next.

Transcript show: (row at: 'xk'); cr.

Transcript show: (row at: 'iv'); cr.

Transcript show: (row at: 'tv'); cr.

] ensure: [ rs close ]

] ensure: [ db close ].

dbf delete.

NBSQLcipherExample class>>examplePragma contains a longer version of the above snippet that includes reopening the encrypted database file without the keying pragma and using the SQLite library.

Tested on Linux Mint. Code updated in Smalltalk Hub. Some refactoring to be expected, because the above snippet using the keying pragma is the only test I've done.

I've placed a copy of libsqlcipher.so here; it is built on my Linux Mint 17.x laptop from the source here, linking in LibreSSL 2.2.4's libcrypto.a statically.

For good measure, I've also put up a copy of sqlcipher built at the same time. It requires readline.

Tags: cryptography, security, SQLiteScripting with Pharo

A couple of links to set the scene, one from 2004, the other from 2005. Their titles relate to this post, although their content doesn't really.

While Pharo is an immersive interactive programming environment, it can also be used for operating system command line-oriented scripting. Take a freshly downloaded standard Pharo 4 image, here renamed p4cli.image:

% pharo -vm-display-null p4cli.image --list

Currently installed Command Line Handlers:

Fuel Loads fuel files

config Install and inspect Metacello Configurations from the command line

save Rename the image and changes file

update Load updates

printVersion Print image version

st Loads and executes .st source files

test A command line test runner

clean Run image cleanup

eval Directly evaluates passed in one line scripts

Let's see how to use NBSQLite3's online backup functionality to back up a live SQLite database through a Pharo script.

Firstly, install the current bleedingEdge version of NBSQLite3.

% pharo -vm-display-null p4cli.image config \

http://www.smalltalkhub.com/mc/Pharo/MetaRepoForPharo40/main \

ConfigurationOfNBSQLite3 --install=bleedingEdge

'Installing ConfigurationOfNBSQLite3 bleedingEdge'

Loading 1.2 of ConfigurationOfNBSQLite3...

Fetched -> NBSQLite3-Core-PierceNg.7 --- ...

...

...

...finished 1.2%

%

Now make a script of the backup code snippet seen in my previous blog post, changing the database file names. In this case, I am using the script to make a copy of my server's firewall log database, which is live and constantly updated by the logging daemon. The script is named 'sqlitebackup.st'.

| srcDB dstDB backup |

srcDB := NBSQLite3Connection openOn: '/var/log/ulog/ulogd.db'.

dstDB := NBSQLite3Connection openOn: '/tmp/ulogd-backup.db'.

[ backup := NBSQLite3Backup new.

srcDB asBackupSource: backup.

dstDB asBackupDestination: backup.

backup prepare.

[ [ backup completed ] whileFalse: [ backup step ] ] ensure: [ backup finish ]

] ensure: [ srcDB close. dstDB close. ]

Run the script under Unix "time":

% time pharo -vm-display-null p4cli.image st --quit sqlitebackup.st

pharo -vm-display-null p4cli.image st --quit sqlitebackup.st

0.26s user 0.10s system 53% cpu 0.675 total

% ls -l /var/log/ulog/ulogd.db* /tmp/*.db

-rw-r--r-- 1 pierce pierce 11636736 May 17 20:49 /tmp/ulogd-backup.db

-rw-r--r-- 1 ulog ulog 11643904 May 17 20:50 /var/log/ulog/ulogd.db

The database files aren't identical, because the logging daemon has written yet more data since the backup was taken.

Tags: scripting, SQLiteNBSQLite3 Online Backup

SQLite provides an online backup API that operates incrementally, so that the source database file is locked only when it is actually read from, allowing other applications to use the source database while the backup is in progress.

I've added the backup API to NBSQLite3. Here's a demonstration code snippet using OpenFootball's World Cup 2014 database:

| srcDB dstDB backup |

srcDB := NBSQLite3Connection openOn: '/tmp/wc2014.db'.

dstDB := NBSQLite3Connection openOn: '/tmp/backup.db'.

[ backup := NBSQLite3Backup new.

srcDB asBackupSource: backup.

dstDB asBackupDestination: backup.

backup prepare.

[ [ backup completed ] whileFalse: [ backup step ] ] ensure: [ backup finish ]

] ensure: [ srcDB close. dstDB close. ]

Let's look at the two databases:

% ls -l *.db

-rw-r--r-- 1 pierce pierce 1101824 May 16 10:58 backup.db

-rw-r--r-- 1 pierce pierce 1101824 Jul 22 2014 wc2014.db

% openssl md5 backup.db wc2014.db

MD5(backup.db)= 408494059b67fc3c7d39b672ce7e525e

MD5(wc2014.db)= 28ab0865e87920ca2f2b1f0de710c622

Ok, the two files have the same size, but aren't bit-wise identical. Now try sqldiff that comes with SQLite:

% sqldiff --help

Usage: sqldiff [options] DB1 DB2

Output SQL text that would transform DB1 into DB2.

% sqldiff wc2014.db backup.db

%

No output, meaning the two files are identical structurally and content-wise, according to sqldiff. Let's make sure:

% sqlite3 backup.db

sqlite> select count(*) from goals;

171

sqlite> select max(id) from goals;

171

sqlite> delete from goals where id > 168;

sqlite> select max(id) from goals;

168

sqlite> ^D

% sqldiff wc2014.db backup.db

DELETE FROM goals WHERE id=169;

DELETE FROM goals WHERE id=170;

DELETE FROM goals WHERE id=171;

The output shows that sqldiff and the SQLite library are in cahoots to make two database files appear identical even when they are not. Haha, no, I mean, the output gives us some assurance that the backup API operated as advertised and created a proper backup of the database.

Now, the demonstration code snippet is somewhat contrived, because it backed up a totally quiescent source database. Next step is to verify backing up a database in active use.

Code updated in Smalltalk Hub. Unit tests and ConfigurationOf update to come.

Tags: SQLiteNBSQLite3 Date Time Handling

SQLite does not support date/time as a built-in data type. Instead, SQLite provides date and time functions to deal with ISO 8601-format strings, floating point values representing Julian days, and Unix epoch time integers.

I've added date/time handling to NBSQLite3, so that storing a DateAndTime object results in an ISO 8601 string in the database, and reading such a string from the database, provided the column type is declared as DateTime, yields a DateAndTime object.

Here's an example using the low-level API:

| dt db stmt |

dt := DateAndTime year: 2015 month: 4 day: 1.

db := NBSQLite3BaseConnection openOn: ':memory:'.

[

db basicExecute: 'create table x (xv datetime)'.

db basicExecute: 'begin'.

stmt := db prepare: 'insert into x values (?)'.

stmt at: 1 putDateTime: dt.

stmt step. stmt finalize.

db basicExecute: 'commit'.

stmt := db prepare: 'select xv from x'.

stmt basicExecute: [ :row |

| v |

v := row dateTimeAt: 0.

Transcript show: v year asString; cr.

Transcript show: v month asString; cr.

Transcript show: v dayOfMonth asString; cr ].

stmt finalize.

] ensure: [ db close ]

And here's the example using the high-level API:

| dt db rs v |

dt := DateAndTime year: 2015 month: 4 day: 1.

db := NBSQLite3Connection openOn: ':memory:'.

[

db basicExecute: 'create table x (xv datetime)'.

db basicExecute: 'begin'.

db execute: 'insert into x values (?)' with: (Array with: dt).

db basicExecute: 'commit'.

rs := db execute: 'select xv from x'.

v := (rs next) at: 'xv'.

Transcript show: v class asString; cr.

Transcript show: v year asString; cr.

Transcript show: v month asString; cr.

Transcript show: v dayOfMonth asString; cr.

rs close

] ensure: [ db close ]

Glorp with NBSQLite3 on Pharo 4

I've integrated NBSQLite3 into Glorp on the current Pharo 4.0 v40592 beta image.

Firstly, install NBSQLite3 (TorstenBergmann.7) and then Glorp (TorstenBergmann.42) from the config browser.

Then, using the Monticello browser, open the NBSQLite3 repository and load the packages NBSQLite3-Glorp and NBSQLite3-Test-Glorp. Next, open the Glorp repository and load the packages Glorp-PierceNg.97.mcz and GlorpTests-PierceNg.44.mcz.

In a workspace/playground, execute the following:

GlorpDemoTablePopulatorResource invalidateSetup.

GlorpDatabaseLoginResource

defaultLogin: GlorpDatabaseLoginResource defaultNBSQLite3LocalLogin

Open Test Runner and run the Glorp tests.

Tested on Linux Mint 17 and OSX Mavericks. 2 fewer tests passed on Linux.

Curiously, #testLargeBlob, #testLargeClob and #testLargeText passed on the Pharo 3 image that I wrote this code on.

The database file created by the tests is sodbxtest.db.

% sqlite3 sodbxtest.db

sqlite> .tables

AIRLINE GALLERY_LINK PERISHABLE_ITEM

AIRLINE_MEAL GLORP_IMAGE PERSON

ATTACHMENT GLORP_IMAGE_FILE POULTRY

ATTACHMENTBYTES GLORP_JOB PUBLISHER_EMP

BANK_ACCT GLORP_OWNER PUBLISHER_TITLE

BANK_TRANS GLORP_SLAVE PUBLISHER_TITLE2

BOOK GLORP_TAG PUB_EMP_LINK

BOOK_CUSTOMER GLORP_TAGS PUB_TITLES_STOCK

COMPRESSED_MONEY_TABLE GLORP_WORKER PUB_TITLE_LINK

CUSTOMER_ACCT_LINK GLORP_WORKER_JOB_LINK RECORD_WITH_UPDATE

CUSTOMER_BOOK_LINK GR_ADDRESS RESERVATION

DEFAULTABLE_THING GR_CUSTOMER STUFF

DOCUMENT GR_FOLDER TAX

EMAIL_ADDRESS GR_MESSAGE TRANSFORMED_TIME

EMPLOYEE GR_PUBLISHER TREE_NODE

ENCYC GR_THINGONE TREE_NODE_LINK

ENCYC_BIO GR_THINGWITHCOLLECTIONS UNASSEMBLED_ITEM

ENCYC_ENTRY GR_THING_LINK VIDEO_CREDIT_STATUS

ENCYC_ENTRY_LINK GR_TRAVEL_AGENT VIDEO_PURCHASE

FKADDRESS GR_USER VIDEO_PURCHASE_LINK

FKCONTACT IMAGETAGS VIDEO_RENTAL

FLIGHT ITINERARY VIDEO_STORE

FLIGHT_PASS NONPERISHABLE_ITEM WAREHOUSE

FREQUENT_FLYER OFFICE WAREHOUSE_ITEM_LINK

GALLERY PASSENGER WORKING_STIFF

sqlite>

NBSQLite3 on STH

Torsten Bergmann refactored NBSQLite3 and moved it to PharoExtras on SmalltalkHub. Here's the announcement to Pharo-Dev.

Thanks Torsten!

Tags: smalltalkhub, SQLiteGlorp with NBSQLite3

Made really good progress with NBSQLite3 for Glorp.

On the failed tests:

The entire GlorpOptimisticLockingTest and GlorpTimestampTest suites are skipped, because some of the tests fail, and foobars the Pharo-SQLite interface, causing many subsequent tests to fail, requiring restart of the Pharo image. Still need to look into these.

Tags: Glorp, OODB, SQLiteGlorp with NBSQLite3

Glorp with NBSQLite3

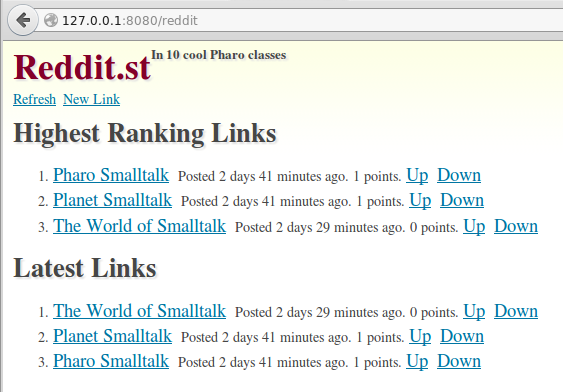

Sven van Caekenberghe has written a very nice tutorial implementing a Reddit clone in Pharo using Seaside, Glorp and PostgreSQL. Sven also makes available a prebuilt image containing the application.

Seeing that the image contains Glorp working with the PostgresV2 driver, I set about integrating NBSQLite3 with Glorp. After about an afternoon's work, I now have Reddit.st working with Glorp+NBSQLite3.

$ sqlite3 reddit.db

SQLite version 3.8.2 2013-12-06 14:53:30

Enter ".help" for instructions

Enter SQL statements terminated with a ";"

sqlite> select * from reddit_links;

1|http://www.pharo.org|Pharo Smalltalk|2014-09-22 22:46:53|1

2|http://planet.smalltalk.org|Planet Smalltalk|2014-09-22 22:47:18|1

6|http://www.world.st/|The World of Smalltalk|2014-09-22 22:58:50|0

sqlite>

There is still much to be done to get Glorp fully working with NBSQLite3: Some tests apparently expect Glorp proxies, but are getting OrderedCollections, and one particular test rendered my Linux X session non-responsive to mouse and keyboard, except for screen brightness key chords!

Tags: Glorp, OODB, SQLiteNBSQLite3 - Callback from C to Pharo Smalltalk

NBSQLite3 now does callback from C to Smalltalk. As a start, the tracing API, which "is invoked at various times when an SQL statement is being run".

| db cb tk s |

db := NBSQLite3Connection openOn: ':memory:'.

[ cb := NBSQLite3TraceCallback on: [ :appdata :sqlText |

Transcript show: 'Trace #', appdata readString, ': '.

Transcript show: sqlText; cr ].

tk := NBExternalAddress fromString: '42'.

db traceUsing: cb with: tk.

db basicExecute: 'create table x (xk integer, xv integer, tv text)'.

s := db prepare: 'insert into x values (NULL, ?, ?)'.

1 to: 10 do: [ :x |

s at: 1 putInteger: x * x.

s at: 2 putString: x asString, ' * ', x asString.

s step. s clearBindings. s reset. ].

s finalize.

db basicExecute: 'drop table x'.

tk finalize

] ensure: [ db close ]

The Transcript output looks thusly:

Trace #42: create table x (xk integer, xv integer, tv text)

Trace #42: insert into x values (NULL, 1, '1 * 1')

Trace #42: insert into x values (NULL, 4, '2 * 2')

Trace #42: insert into x values (NULL, 9, '3 * 3')

Trace #42: insert into x values (NULL, 16, '4 * 4')

Trace #42: insert into x values (NULL, 25, '5 * 5')

Trace #42: insert into x values (NULL, 36, '6 * 6')

Trace #42: insert into x values (NULL, 49, '7 * 7')

Trace #42: insert into x values (NULL, 64, '8 * 8')

Trace #42: insert into x values (NULL, 81, '9 * 9')

Trace #42: insert into x values (NULL, 100, '10 * 10')

Trace #42: drop table x

Test incorporated and passes on OSX Mountain Lion and Linux Mint 17 for Pharo 3. Get the source on SS3.

Tags: SQLiteROEing

I've been playing with the OpenFootball data using ROE. ROE provides RAArrayRelation, which shares a common superclass with RASQLRelation. As its name implies, RAArrayRelation uses an in-memory array object and does not involve any underlying SQL database.

In my OpenFootball data crunching, I tried JOINing instances of both. When the left side of the JOIN is the RASQLiteRelation instance, the operation threw an SQLite exception "no such table" because ROE expected the right side to also be an RASQLiteRelation on the same underlying database. When the left side is the RAArrayRelation instance, the operation sends #tuples to the right side, which resulted in a DNU. I've tried a few implementations of RASQLiteRelation>>tuples, but so far no success. Even if I get a working implementation, the implication of #tuples is that all matching rows from the SQLite database are loaded into memory, which is not always performant or feasible.

The reason for using an RAArrayRelation is to create a new relation unlike any existing one. The alternative is to create an RASQLiteRelation instance on a new, empty table. However, ROE does not support creating tables in the underlying database. Of course, I can use the given database connection to CREATE TABLE via direct SQL, but that doesn't feel satisfying.

Tags: ROE, SQLiteCommitted ROE with NBSQLite3 to STH

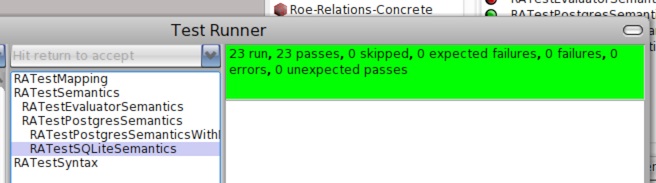

I've committed my integration of ROE with NBSQLite3 to SmalltalkHub.

Tested on Pharo 3 on OSX Mountain Lion and Linux Mint 17 based on Ubuntu 14.04. All 23 tests of RATestSQLiteSemantics pass.

Tags: ROE, smalltalkhub, SQLiteWorld Cup 2014 with ROE

football.db is a "free open public domain football (soccer) database". Instructions to construct an SQLite data file from the data are on the website. The database consists of 40 tables; its schema looks normalized. With the World Cup 2014 and dependent data, the file weighs in at ~1MB.

Let's see what we can do with the data using ROE.

Each league/tournament is given an event ID; the World Cup's event_id is 8.

| conn groups teams wcTeams |

conn := NBSQLite3BaseConnection on: ('/home/pierce/data/openfootball/sport.db').

conn open.

groups := (((RASQLiteRelation name: 'groups' connection: conn)

where: #event_id equals: 8)

projectAll: #(id title))

renameAll: #(id title) to: #(gid gTitle).

teams := (RASQLiteRelation name: 'teams' connection: conn)

renameAll: #(id title) to: #(tid tTitle).

wcTeams := ((RASQLiteRelation name: 'groups_teams' connection: conn) * groups

whereEqual: #(group_id gid)).

((wcTeams * teams whereEqual: #(team_id tid))

projectAll: #(gTitle tTitle)) explore.

The above code snippet results in a RAProjection instance. We can see its content by the following (pretty-printed by hand):

(self collect: [ :ea | ea values asArray ]) asArray

#(#('Group A' 'Brazil')

#('Group A' 'Croatia')

#('Group A' 'Mexico')

#('Group A' 'Cameroon')

#('Group B' 'Spain')

#('Group B' 'Netherlands')

#('Group B' 'Chile')

#('Group B' 'Australia')

#('Group C' 'Colombia')

#('Group C' 'Greece')

#('Group C' 'Côte d''Ivoire')

#('Group C' 'Japan')

#('Group D' 'Uruguay')

#('Group D' 'Costa Rica')

#('Group D' 'England')

#('Group D' 'Italy')

#('Group E' 'Switzerland')

#('Group E' 'Ecuador')

#('Group E' 'France')

#('Group E' 'Honduras')

#('Group F' 'Argentina')

#('Group F' 'Bosnia-Herzegovina')

#('Group F' 'Iran')

#('Group F' 'Nigeria')

#('Group G' 'Germany')

#('Group G' 'Portugal')

#('Group G' 'Ghana')

#('Group G' 'United States')

#('Group H' 'Belgium')

#('Group H' 'Algeria')

#('Group H' 'Russia')

#('Group H' 'South Korea'))

(Ivory Coast's proper name in the output doesn't look right in Pharo. The SQLite database is correct though.)

Tags: ROE, SQLiteNBSQLite3

I've started a page on NBSQLite3. Comments welcome.

Tags: SQLiteROE with NBSQLite3

ROE is "a library for manipulating relational queries as first class Smalltalk expressions, and generating SQL from them."

Not bad for an afternoon's hacking, now ROE works with NBSQLite3 and all tests pass.

(Yes, I notice these screenshots are of rather poor quality.)

Tags: ROE, SQLiteNBSQLite3 - NativeBoost SQLite for Pharo

I like SQLite. SQLite is small, fast and reliable. As mentioned in this old blog post, I was extending the Squeaksource SQLite FFI wrapper to support parameter binding. Since then, Torsten Bergmann has also picked it up and now tends to it on SmalltalkHub.

Taking the chance to learn NativeBoost, with hints from Masashi Umezawa's PunQLite, I took my SQLite FFI changes and created NBSQLite3. NBSQLite3's small test suite runs successfully on Pharo 2.0 (OSX, Linux) and the current Pharo 3.0 beta on OSX.

Here's a multithreading example:

| db db2 sem blocking blocked |

db := NBSQLite3BaseConnection on: 'file::memory:?cache=shared'.

db2 := NBSQLite3BaseConnection on: 'file::memory:?cache=shared'.

sem := Semaphore new.

Transcript open; clear.

blocking := [ :x :sema |

Transcript show: 'Thread-1: begin exclusive...'; cr; flush.

[ x open.

x execute: 'begin exclusive'.

sema wait.

Transcript show: 'Thread-1: releasing exclusive lock.'; cr; flush.

x execute: 'rollback'

] ensure: [ x close ]

] newProcessWith: (Array with: db with: sem).

blocking resume.

Processor yield.

blocked := [ :x |

Transcript show: 'Thread-2: create table ...'; cr; flush.

[[ x open. x execute: 'create table if not exists x (xk integer)' ]

on: NBSQLite3Locked do: [ :ex | Transcript show: ex messageText; cr; flush ]

] ensure: [ x close ]

] newProcessWith: (Array with: db2).

blocked resume.

Processor yield.

sem signal.

To run this example, evaluate "NBSQLite3Example multithreading". The output looks thusly:

Thread-1: begin exclusive...

Thread-2: create table ...

database schema is locked: main

Thread-1: releasing exclusive lock.

The SQLite shared library is compiled with -DSQLITE_USE_URI=1 and -DSQLITE_THREADSAFE=2.

Tags: SQLiteStackOverflow SQLite Full Text Search

In the previous entry, I wrote about parsing the StackOverflow XML dump files.

This entry is about setting up SQLite full text search for the StackOverflow posts data. In Smalltalk, set up the database schema:

| schema db |

schema := 'create table posts (Id integer primary key, ...)'.

db := SQLiteConnection fileNamed: 'so.db'.

db open.

db executeQuery: schema.

db close.

The key SAX parsing callback method becomes, in essence, as follows:

startElement: aQualifiedName attributes: aDict

| stmt |

"db is the instance variable with the database connection."

stmt := db prepare: 'insert into posts values (?,...,?)'.

aQualifiedName = 'row' ifTrue: [

stmt at: 1 putInteger: (aDict at: 'Id') asInteger.

...

stmt at: 20 putInteger: (aDict at: 'FavoriteCount' ifAbsent: [-1]) asInteger.

stmt step. stmt clearBindings. stmt reset ].

stmt finalize.

On my computer, running through the 6+ million posts took about an hour. The resultant SQLite database file is about 6.5GB in size. Here are the comparative file sizes:

-rw-r--r-- 1 pierce staff 7569879502 Sep 7 2011 posts.xml

-rw-r--r-- 1 pierce staff 6819280896 Nov 24 20:31 so.db

Next, let's set up full text search on this database:

% sqlite3 so.db

sqlite> create virtual table sposts using fts4 (id, body, title);

sqlite> insert into sposts select id, body, title from posts;

sqlite> create virtual table stags using fts4 (id, tags);

sqlite> insert into stags select id, tags from posts;

sqlite>

I didn't time how long the INSERTs took. Setting up full text search boosted the database's file size:

-rw-r--r-- 1 pierce staff 6819280896 Nov 24 20:31 so.db (before FTS)

-rw-r--r-- 1 pierce staff 16491300864 Nov 25 21:34 so.db (after FTS)

Now search the database using the sqlite3 shell tool:

% sqlite3 so.db

sqlite> select count(*) from stags where tags match 'smalltalk';

332

sqlite> select max(id) from stags where tags match 'smalltalk';

7260027

sqlite> select body, tags from posts where id = 7260027;

<p>what's the best server side programming language for web development

if we keep very good performance and a lot of database interaction of

the script with server in mind. i am already working on a project with

php, i'm fearing that my application might have a lot of traffic, i

just want to use only the server side language which is best in terms

of performance, and please also guide me any major step i should take

to prevent my application from crashing when it will face a huge

traffic, what major step i might need to take to stop myself crying

after launching my applicaition, i heard that twitter use ruby, how's

ruby's performance? my concern is only and only performance and

database interaction, thanks in advance.</p>

|<php><python><ruby-on-rails><ruby><smalltalk>

sqlite>

SQLite Parameter Binding

I like SQLite. SQLite is small, fast and reliable. There is an SQLite FFI wrapper on Squeaksource which is very simple to use. Let's start with this database:

$ sqlite3 /tmp/test.db

SQLite version 3.7.6

Enter ".help" for instructions

Enter SQL statements terminated with a ";"

sqlite> create table a (k integer primary key, tv text, iv integer);

sqlite> insert into a values (1, 'wah wah wah', 7);

sqlite> insert into a values (2, 'quick brown fox', 42);

Run the following snippet in a workspace:

| db |

Transcript clear.

db := SQLiteConnection fileNamed: '/tmp/test.db'.

db withResultForQuery: 'select * from a;' do: [ :result |

result do: [ :row | Transcript show: row asString; cr ]].

db close.

The output is shown in the Transcript thus:

a Dictionary('iv'->'7' 'k'->'1' 'tv'->'wah wah wah' )

a Dictionary('iv'->'42' 'k'->'2' 'tv'->'quick browm fox' )

The underlying SQLite API used by withResultForQuery:do: is sqlite3_get_table, which is "a legacy interface preserved for backwards compatibility". Using this API, at the C level, NULL values are NULL pointers, and all other values are in their UTF-8 zero-terminated string representation. Not very interesting at the Smalltalk level, either, as seen from the Transcript.

SQLite also supports "prepared statements", aka "parameter binding". A prepared statement is compiled once (using sqlite3_prepare, into an SQLite VDBE virtual machine program), then executed multiple times (using sqlite3_step). Parameters are bound using sqlite3_bind_XXX calls, and values are retrieved using sqlite3_column_XXX calls. Parameter binding defends against SQL injection attacks.

I'm extending the SQLite FFI wrapper to support some of the parameter binding APIs. (Well, until enough to do my stuff.) Here's an example code snippet:

| db stmt |

Transcript clear.

db := SQLiteConnection fileNamed: '/tmp/test.db'.

db open.

[ stmt := db prepare: 'select * from a;'.

stmt isNil ifFalse: [

| value |

stmt execute: [ :row |

value := row stringAt: 1.

Transcript show: value, ' (', value class asString, ')'; cr.

value := row integerAt: 2.

Transcript show: value asString, ' (', value class asString, ')'; cr ].

stmt finalize ]]

ensure: [ db close ]

The Transcript displays the following:

wah wah wah (ByteString)

7 (SmallInteger)

the quick brown fox (ByteString)

42 (SmallInteger)